Note

This is the first article in a series regarding NDepend, a code quality and static analysis tool authored by Patrick Smacchia.

Some time ago I was fortunate enough to be granted a license of NDepend, a tool which has now achieved some level of fame as the de facto standard for assessing and monitoring the quality of a .NET source base. Of course, the moment you mention the word quality in the software world, you're met with a barrage of contention around the means one should use to characterise quality.

I had long known that Patrick Smacchia's brainchild offered a pretty exhaustive set of metrics to measure code by, largely the standard ones recognised by the literature (but sadly, not necessarily widely applied in the industry). LoC, cyclomatic complexity, cohesion and coupling are just a few of them, but there are many more, and the debate around the value of a metric intensifies as the metric in question becomes more obscure.

Giving NDepend a spin for the first time, I thought that I'd run it against something very simple. Some time ago, I started making a habit of doing a daily code kata, concentrating on String Calculator; my first attempt took about 40 minutes and now I manage to complete the full kata in around 15 minutes. With an oft-repeated piece of code which is effectively now committed to muscle memory (with the occasional syntactic deviation to revive the grey matter), how would the result be sized up by NDepend?

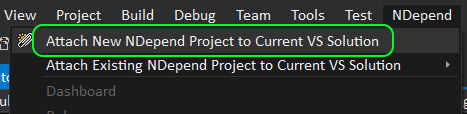

Since NDepend, once installed, can be driven directly from within Visual Studio (I'm using 2017), getting a full analysis done is quite straightforward, i.e.,

- Open the solution from within VS 2017;

- Elect to create a new NDepend project and attach it to the current solution.

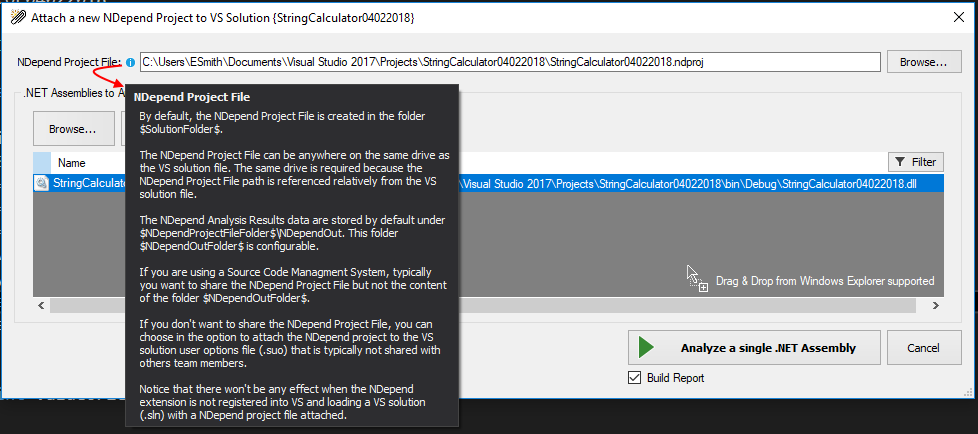

Doing so pops up a project creation dialogue box. Immediately my appreciation for the discoverability of this program increases. That is: a common question that I have in the back of my mind is where things are being created and located on the filesystem. The location of the NDepend project is clear; not only that, but hovering over the information symbol provides some detailed documentation about how NDepend treats project file locations, even going so far as to mention some "under the hood" stuff like why it's important that the NDepend project file be on the same drive as the VS solution file. This is software that respects the intellect and diligence of its user, which is unfortunately a bit of a rarity nowadays. Of course, the fact that the target user base is particularly niche doesn't escape me; nevertheless, I dare say this is still unusual, especially in Microsoft-land.

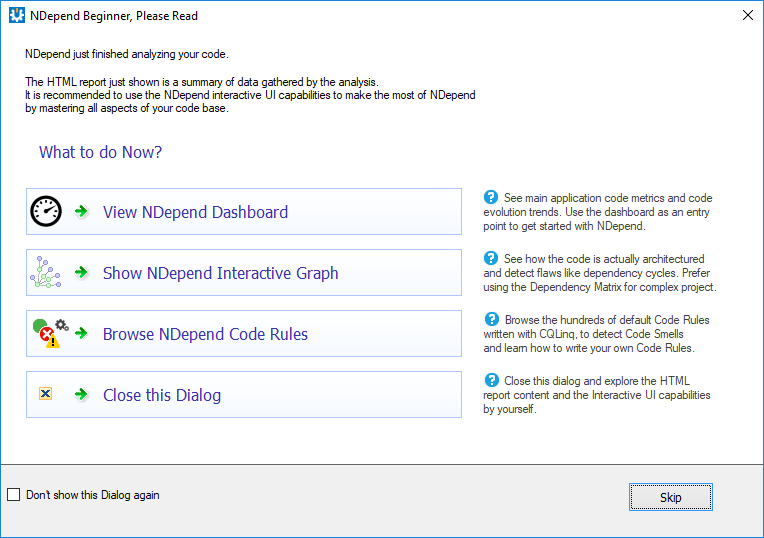

After clicking the "Analyse" button, an analysis progress tab is opened and the process takes about 13 seconds to complete on my admittedly slightly resource-burdened laptop. Once again, I'm gently guided through the process by means of a "What next, NDepend beginner?" dialogue box (you can elect to promote yourself to journeyman, thus avoiding these training wheels, by checking a box in the lower left corner).

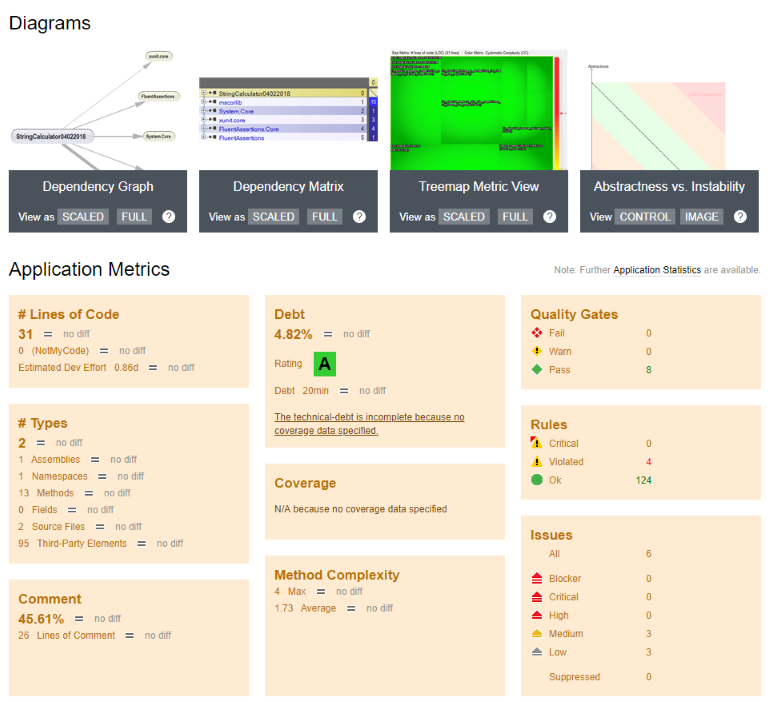

At the same time, my browser has been fired up and is pointing at a summary HTML report.

Despite having the adjective "summary" applied to it, the HTML report is nonetheless a cornucopia of graphs, colourful charts and metrics.

The image above is only a portion of the report; it carries on for some time further on in the browser.

For the purposes of keeping things focussed, I'm going to dip my big toe in with something that's a little more familiar to me presently, namely technical debt. I'm going to allow myself some time to cover this tool over the coming blog posts, as it's clearly big.

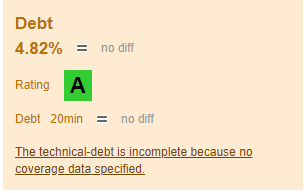

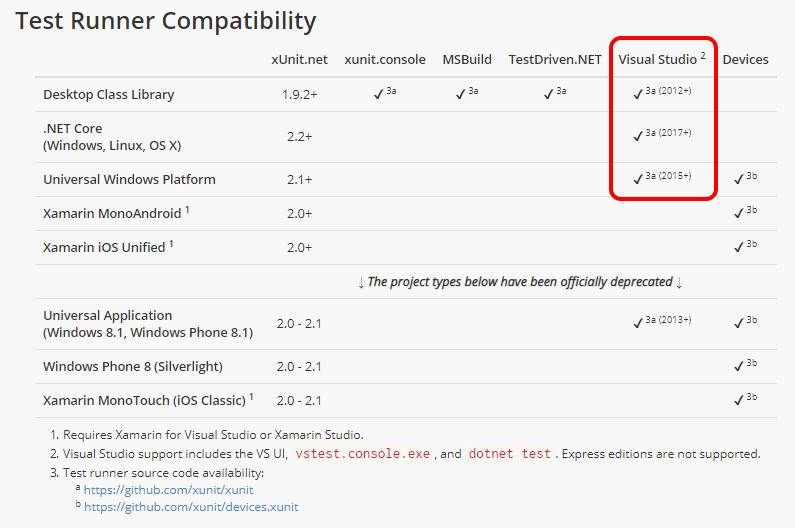

What I found interesting is that the technical debt estimate is deemed incomplete because no coverage data has been specified. The String Calculator kata is a TDD-driven kata, so I'm expecting a very high degree of coverage. Clicking through on the link provided leads to more detail on the NDepend site, and it becomes clear that I'll need an intermediate tool to supply the coverage data. One of the options is the output of JetBrains dotCover; I've already made a substantial personal investment in JetBrains ReSharper and, disappointingly, I see that dotCover is an additional cost over and above the Ultimate edition of ReSharper. Fortunately, one of the other options is the built-in coverage facility provided by Visual Studio, but using VS coverage would, no doubt, require the use of the VS test runner, and I am dubious about its support for xUnit. A quick lean on Google reveals a useful table:

The next step will be to get coverage going on this little kata in order to complete the technical debt assessment. That will involve getting Visual Studio to do its coverage thing (should be relatively straightforward), as advertised on the xUnit site, or getting something else to do it. Code coverage, of course, is a very important metric, but one that can only really be appreciated once the value of automated testing has sunk in.

Taking NDepend for its first run has been a pleasant experience—this is the sort of software that I enjoy working with; clearly it has been built for developers by developers. I'm looking forward to digging deeper in future posts.
